The next chapter of AI won't be won by building more chips; it'll be won by using them better

At Blackhorn Ventures, we've been watching a collision course form in AI infrastructure.

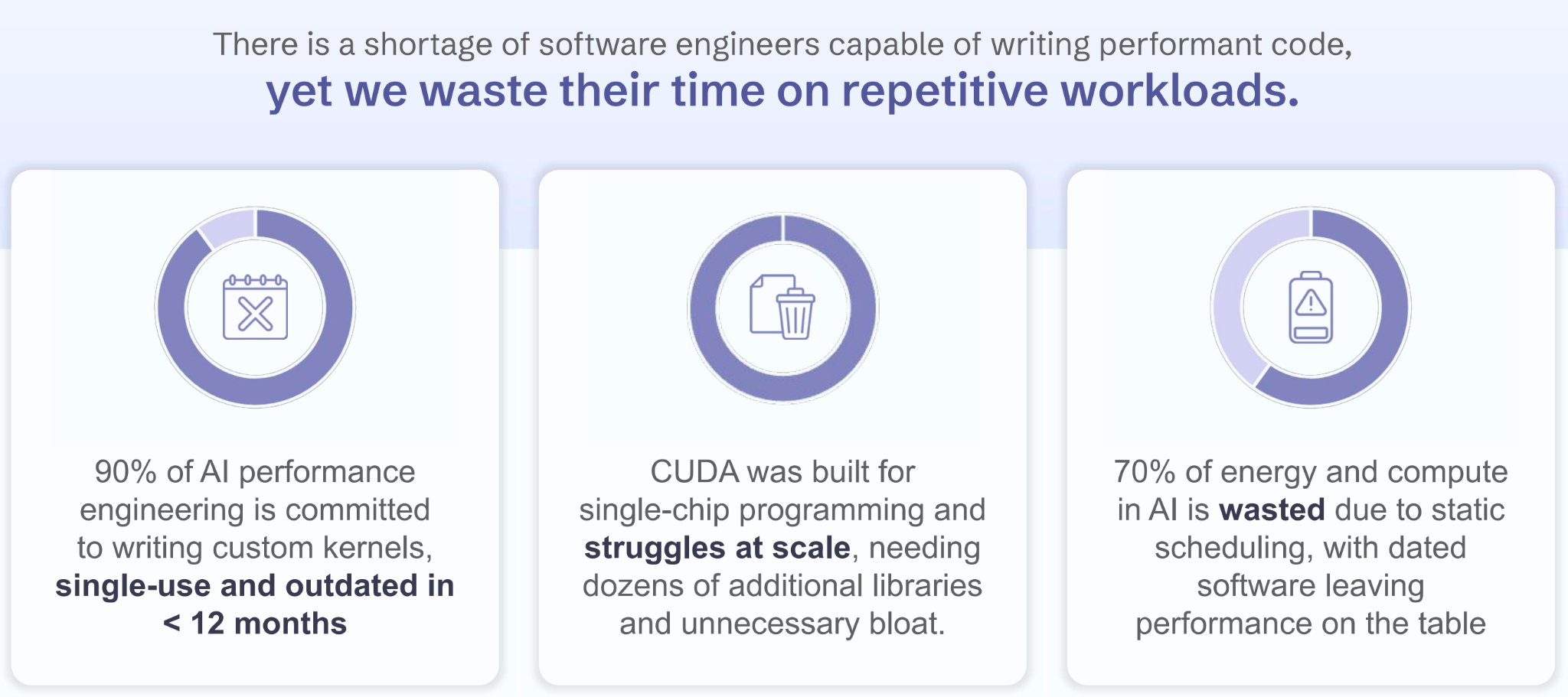

Here's what most people miss about the AI infrastructure buildout: we don't have a compute problem. We have a utilization problem. The most advanced GPUs in the world spend significant time doing nothing—waiting for data to arrive from memory.

Compute throughput has grown 60,000x over twenty years. Memory bandwidth has grown 100x. This gap—the "memory wall"—means we're building ever-more-powerful chips that can't be fed fast enough to reach their potential.
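To make the size of that gap concrete, here is a rough back-of-the-envelope sketch in Python. It uses only the two multiples quoted above and assumes smooth compound growth over the twenty years, which is a simplification; the function and variable names are ours, for illustration only.

```python
def annual_growth(total_multiple: float, years: float) -> float:
    """Compound annual growth factor implied by a total multiple over `years`.

    Assumes smooth exponential growth, which is a simplification.
    """
    return total_multiple ** (1.0 / years)


# Figures quoted in the text above.
compute_multiple = 60_000    # growth in compute throughput
bandwidth_multiple = 100     # growth in memory bandwidth
years = 20

compute_rate = annual_growth(compute_multiple, years)
bandwidth_rate = annual_growth(bandwidth_multiple, years)

# How much further compute has pulled ahead of memory bandwidth overall.
gap = compute_multiple / bandwidth_multiple

print(f"Compute:   ~{(compute_rate - 1) * 100:.0f}% per year")
print(f"Bandwidth: ~{(bandwidth_rate - 1) * 100:.0f}% per year")
print(f"Cumulative gap: {gap:.0f}x")
```

Under that assumption, compute has compounded at roughly 73% a year against roughly 26% a year for memory bandwidth, leaving a cumulative gap of 600x.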

The industry's response has been predictable: build more chips, stack more memory, pour more concrete for data centers. One hyperscaler recently doubled its AI cluster power budget to 300 megawatts. That's enough electricity to power a mid-sized city.

We think there's a smarter path. That's why we're investing in Lemurian Labs.

The contrarian bet

While capital floods into chip fabrication and data center construction, we're backing a Toronto-based team that's attacking the problem from a different angle entirely: what if we could get dramatically more from the silicon we already have?

Lemurian's Diluvian stack is a ground-up rethinking of how AI workloads execute on hardware. Its compiler and runtime system takes unmodified Python/ML code and transforms it for optimal execution across heterogeneous compute (CPUs, GPUs, and accelerators), without vendor lock-in and without requiring developers to change a single line of code.

The results: 20-30% reductions in energy consumption and cost-per-inference. Not through new hardware. Through intelligent orchestration of existing hardware.

How it works

The Diluvian stack operates through three integrated components:

TiDaL IR captures compute workloads as mathematical patterns rather than hardware-specific instructions—a portable representation that travels across silicon.

HADAL maps these patterns to optimal execution strategies for whatever hardware is available.

DELUGE orchestrates data movement in real time, minimizing the memory latency that causes chips to sit idle.
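Why does data movement matter so much? One common way to reason about it is the roofline model, sketched below in Python. This is a generic textbook model, not a description of how DELUGE or any other Lemurian component works, and the hardware numbers are hypothetical placeholders rather than the specs of any real chip.

```python
def attainable_flops(peak_flops: float, mem_bandwidth: float,
                     arithmetic_intensity: float) -> float:
    """Roofline model: throughput is capped by either compute or memory.

    peak_flops           -- peak compute rate, FLOP/s
    mem_bandwidth        -- memory bandwidth, bytes/s
    arithmetic_intensity -- FLOPs performed per byte moved from memory
    """
    return min(peak_flops, mem_bandwidth * arithmetic_intensity)


def utilization(peak_flops: float, mem_bandwidth: float,
                arithmetic_intensity: float) -> float:
    """Fraction of peak compute that the workload can actually use."""
    return attainable_flops(peak_flops, mem_bandwidth,
                            arithmetic_intensity) / peak_flops


# Hypothetical accelerator (illustrative values only).
PEAK = 1e15        # 1,000 TFLOP/s
BANDWIDTH = 3e12   # 3 TB/s

for intensity in (10, 100, 1000):  # FLOPs per byte
    u = utilization(PEAK, BANDWIDTH, intensity)
    print(f"{intensity:>5} FLOP/byte -> {u:.0%} of peak")
```

With these placeholder numbers, a workload that does 10 FLOPs per byte fetched can use only about 3% of the chip's peak, and one at 100 FLOPs per byte about 30%. Only when enough work is done per byte moved does the chip become compute-bound, which is why software that reduces or hides data movement can raise utilization without new hardware.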

This is infrastructure software that makes hardware investments go further. In a world where DRAM accounts for 40%+ of data center power consumption, that's not incremental—it's transformative.

Aligned incentives

Lemurian's business model reinforces the thesis. Their work-accomplished pricing charges customers for throughput gains, measured in a new compute unit (HXUs), rather than for raw compute time. They only win when utilization improves. That's the kind of incentive structure that builds durable customer relationships.

The team

The team reads like a roster built for exactly this problem.

CEO Jay Dawani leads a founding team with roots at NVIDIA, Intel, and Microsoft. VP of Engineering Chris Vick brings three decades of engineering leadership at Qualcomm, Oracle, and Sun Microsystems—the kind of deep systems expertise that's rare and hard-won. Recent additions include Adam Robertson, dubbed "the father of DevOps," as Head of AI Infrastructure (from Control Plane Corporation and WitnessAI), and Abhay Chitral, formerly of Intel, as Head of Product.

This isn't a team learning infrastructure as they go. They've spent careers building it.

Why this, why now

At Blackhorn, we invest in digital infrastructure that drives resource efficiency across industrial systems. Lemurian sits directly at the intersection of our core themes: compute efficiency and industrial decarbonization.

But timing matters too. The market is shifting. After two years of "more chips, more data centers, more power," we're seeing investor sentiment cool on capital-intensive AI bets. The questions are changing from "how do we build more capacity?" to "how do we extract more value from existing capacity?"

Lemurian is building the answer. We're participating in their $25M Series A alongside Hexagon and Pebblebed, and we're bringing hyperscaler introductions, benchmark expertise, and go-to-market support.

The race to build AI infrastructure isn't slowing down. But the winners of the next phase will be the ones who help the industry use what we've already built.

Welcome to the Blackhorn portfolio.